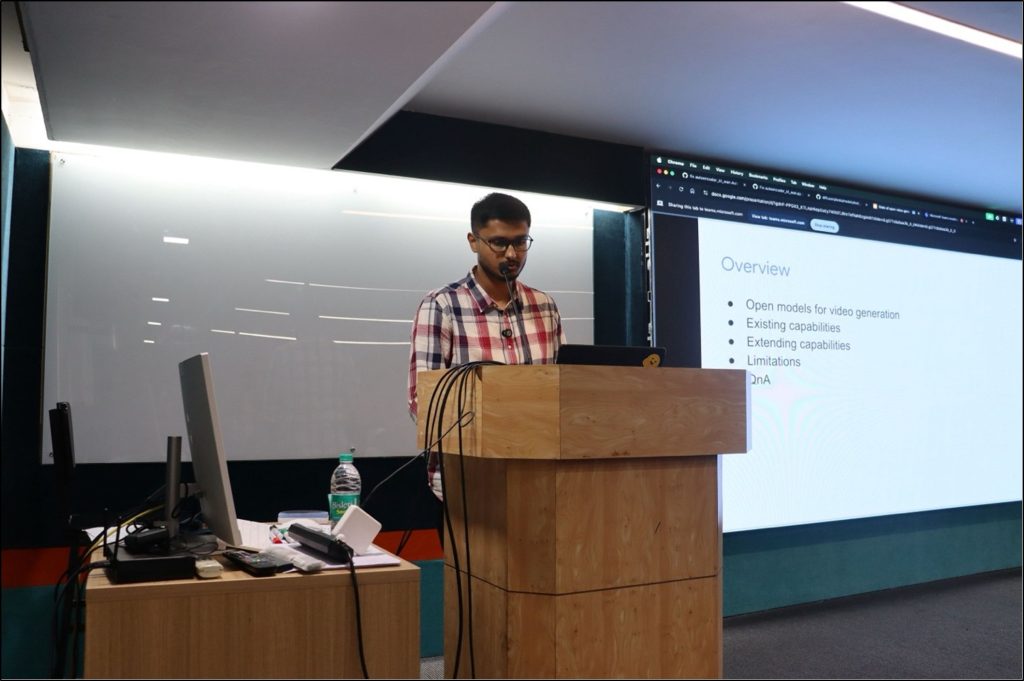

State of Open Video Generation Models

Speaker: Sayak Paul, Hugging Face

Date: 17 September 2025

In his talk, ‘State of Open Video Generation Models’, Sayak Paul surveyed what open video generation models (OVGMs) can produce, their current capabilities and how these can be extended, and their limitations and how these can be handled.

Recent progress in video generation has been substantial. For example, models such as Sora (a closed model) can interpret the hierarchy of, and the associations between, the different subjects described in a prompt. Popular OVGMs include LTX, Wan 2.2, and Hunyuan. At the time of the talk, Wan 2.2 was the state-of-the-art open model for video generation, in terms of both quality and quantity.

The capabilities of recent video generation models extend beyond text-to-video to image-to-video generation, multi-image-to-video generation, frame interpolation between a given first and last frame, video-to-video synthesis, and pose-to-video generation. The speaker explored use cases for each of these capabilities.
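Several of these capabilities differ mainly in which frames are given to the model. A common way to express this, sketched below under stated assumptions, is to place the known frames in a conditioning tensor, mark them with a binary mask, and concatenate both with the noisy input along the channel axis. The exact layout varies from model to model; `build_conditioning` and `model_input` are hypothetical helpers, not any library's API.

```python
import numpy as np


def build_conditioning(shape, known):
    """Build conditioning frames and a binary mask for a video of shape (F, C, H, W).

    known maps a frame index (negative indices allowed) to a (C, H, W) frame.
    mask is 1.0 for frames that are given and 0.0 for frames to be generated.
    """
    num_frames, channels, height, width = shape
    cond = np.zeros((num_frames, channels, height, width), dtype=np.float32)
    mask = np.zeros((num_frames, 1, height, width), dtype=np.float32)
    for idx, frame in known.items():
        cond[idx] = frame
        mask[idx] = 1.0
    return cond, mask


def model_input(noisy, cond, mask):
    """Concatenate noisy frames, conditioning frames and mask along the channel axis."""
    return np.concatenate([noisy, cond, mask], axis=1)


if __name__ == "__main__":
    shape = (5, 3, 4, 4)
    first = np.ones(shape[1:], dtype=np.float32)
    last = np.full(shape[1:], 2.0, dtype=np.float32)

    # Image-to-video: only the first frame is known.
    _, i2v_mask = build_conditioning(shape, {0: first})
    # First/last-frame interpolation: both endpoints are known.
    _, interp_mask = build_conditioning(shape, {0: first, -1: last})

    print(i2v_mask[:, 0, 0, 0])     # [1. 0. 0. 0. 0.]
    print(interp_mask[:, 0, 0, 0])  # [1. 0. 0. 0. 1.]
```

The same model input then serves several tasks: only the set of known frames changes.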

OVGMs come in all shapes and sizes, with different memory requirements and licences. Sayak Paul focused on open models based on diffusion or flow matching. These are not monolithic models: each is composed of several separate components.
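To make the "several components" point concrete, here is a minimal sketch of how a flow-matching pipeline wires a text encoder, a denoiser, and a decoder together. It assumes the linear path x_t = (1 - t)·x_0 + t·ε, one common flow-matching convention, and a plain Euler sampler; real pipelines add schedulers, guidance, and learned networks. The component callables are hypothetical stand-ins.

```python
import numpy as np


def euler_flow_sample(velocity_fn, noise, num_steps):
    """Integrate dx/dt = v(x, t) from t = 1 (pure noise) to t = 0 (data) with Euler steps.

    Assumes the linear path x_t = (1 - t) * x_0 + t * noise, whose velocity is noise - x_0.
    """
    x = noise.copy()
    ts = np.linspace(1.0, 0.0, num_steps + 1)
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        v = velocity_fn(x, t_cur)
        x = x + (t_next - t_cur) * v  # t_next < t_cur, so this moves towards the data
    return x


def generate(prompt, text_encoder, denoiser, decoder, latent_shape, num_steps=20, seed=0):
    """Toy pipeline wiring three separate components together (all hypothetical callables)."""
    rng = np.random.default_rng(seed)
    cond = text_encoder(prompt)                # component 1: prompt -> conditioning
    noise = rng.standard_normal(latent_shape)  # start from Gaussian noise in latent space
    latents = euler_flow_sample(lambda x, t: denoiser(x, t, cond), noise, num_steps)  # component 2
    return decoder(latents)                    # component 3: latents -> pixels


if __name__ == "__main__":
    target = np.full((2, 4, 4), 0.5)
    out = generate(
        "a red balloon",
        text_encoder=lambda p: target,         # stand-in: "encodes" the prompt as the target latent
        denoiser=lambda x, t, c: (x - c) / t,  # oracle velocity towards that single target
        decoder=lambda z: 2.0 * z,             # stand-in for the VAE decoder
        latent_shape=target.shape,
    )
    print(np.allclose(out, 1.0))  # True
```

Because each component is a separate object, each can be swapped, quantised, or offloaded independently.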

Because video models learn stronger priors about the world, they can be repurposed for image generation. They can also be used to prepare image-editing data and to train new video effects such as ‘cakeify’ and ‘3D dissolve’.
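The talk does not detail how image-editing data is prepared. One plausible recipe, offered here purely as an assumption, is to prompt a video model with a transformation, keep the first frame as the input image and the last frame as the edited image, and discard clips that barely change. `make_edit_pair` and its `min_change` threshold are hypothetical.

```python
import numpy as np


def make_edit_pair(frames, instruction, min_change=0.05):
    """Turn a generated clip into an (input image, edited image, instruction) example.

    frames: float array of shape (F, H, W, C) with values in [0, 1].
    Returns None when the clip barely changes, since such a pair teaches no edit.
    """
    source, target = frames[0], frames[-1]
    change = float(np.mean(np.abs(target - source)))
    if change < min_change:
        return None
    return {"source": source, "target": target, "instruction": instruction, "change": change}


if __name__ == "__main__":
    clip = np.zeros((8, 16, 16, 3))
    clip[-1] = 1.0  # the last frame differs completely from the first
    pair = make_edit_pair(clip, "turn the scene white")
    print(pair["change"])                                          # 1.0
    print(make_edit_pair(np.zeros((8, 16, 16, 3)), "do nothing"))  # None
```

A real filter would likely also check that the subject is preserved between the two frames; the mean pixel difference used here is only the simplest possible signal.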

How does one make the model aware that something is a deformity and that the deformity should not be favoured in the generated video? How does one evaluate, and ensure, that the model does not violate physical laws? The list of things a model must learn during pretraining is long, and the model seems to prioritise fidelity, object accuracy, and motion. If the pretraining data lacks well-composed shots, proper camera angles, and videos that obey physical laws, little can be done at that stage. Aspects such as prompt following, video length extension, and adherence to physical laws are usually addressed in the post-training stage.

How does one use these models in production? How can researchers and practitioners work with different kinds of models in a consumer-accessible way? Diffusers, a Hugging Face library, provides unified access to the video and image generation models available in the community. It keeps these models accessible on consumer hardware through several offloading strategies with different trade-offs, easy quantisation support with multiple back-ends, and memory-efficient attention techniques.
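The trade-off between offloading and quantisation can be seen with back-of-the-envelope arithmetic. The sketch below counts weight memory only (activations and latents are ignored) and uses hypothetical component sizes. Its "model" strategy roughly mirrors what Diffusers' `enable_model_cpu_offload()` does, moving whole components onto the accelerator one at a time; `enable_sequential_cpu_offload()` goes finer-grained at a larger cost in speed and is not modelled here.

```python
# Bytes per parameter for a few common weight formats (4-bit formats store two weights per byte).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "4bit": 0.5}


def weight_gb(num_params, fmt):
    """Memory taken by a component's weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def peak_weight_gb(components, strategy):
    """Peak accelerator memory for weights only (activations and latents are ignored).

    components: dict of name -> (num_params, fmt).
    strategy "none": every component stays on the accelerator.
    strategy "model": whole components are moved onto the accelerator one at a time.
    """
    sizes = [weight_gb(n, fmt) for n, fmt in components.values()]
    if strategy == "none":
        return sum(sizes)
    if strategy == "model":
        return max(sizes)
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    # Hypothetical component sizes, not those of any particular model.
    pipeline = {
        "text_encoder": (5e9, "bf16"),
        "transformer": (14e9, "bf16"),
        "vae": (1e8, "bf16"),
    }
    print(round(peak_weight_gb(pipeline, "none"), 1))    # 38.2
    print(round(peak_weight_gb(pipeline, "model"), 1))   # 28.0
    quantised = dict(pipeline, transformer=(14e9, "int8"))
    print(round(peak_weight_gb(quantised, "model"), 1))  # 14.0
```

Offloading bounds the peak by the largest single component, and quantising that component shrinks the bound further; the two techniques compose.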

Though there has been much progress in video generation models, there are some limitations.

- Inadequate prompts: how does one write a caption that faithfully captures every aspect expected in a video, such as the shot, subject, expression, appearance, action, motion dynamics, lighting, and video duration?

One way to address this is to introduce structured prompts that spell out all of these details. However, some users may instead provide derived captions, so the model can be pretrained on a mix of structured (90%) and derived (10%) prompts.
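A minimal sketch of this idea, assuming the structured caption is stored as one field per aspect and the 90/10 mix is applied by sampling independently for each training example. The field names, the "name: value" rendering, and `pick_caption` are hypothetical; only the list of aspects and the 90/10 ratio come from the talk.

```python
import random
from dataclasses import dataclass, fields


@dataclass
class StructuredCaption:
    # One field per aspect listed above (names are illustrative).
    shot: str
    subject: str
    expression: str
    appearance: str
    action: str
    motion: str
    lighting: str
    duration_s: float

    def render(self) -> str:
        """Serialise every field as 'name: value', separated by semicolons."""
        return "; ".join(f"{f.name}: {getattr(self, f.name)}" for f in fields(self))


def pick_caption(structured: StructuredCaption, derived: str,
                 rng: random.Random, p_structured: float = 0.9) -> str:
    """Use the structured caption with probability p_structured, else the derived one."""
    return structured.render() if rng.random() < p_structured else derived


if __name__ == "__main__":
    cap = StructuredCaption("close-up", "a cat", "curious", "orange fur",
                            "jumps onto a table", "fast, upward", "soft daylight", 5.0)
    rng = random.Random(0)
    print(pick_caption(cap, "a cat jumps onto a table", rng))
```

Mixing in derived captions keeps the model usable when a prompt omits most of the structured fields.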

SkyReels-V2 is a good example of how these problems can be tackled in multiple stages:

- Structured data processing pipeline with detailed captioning

- Multiple post-training stages (including reinforcement learning) to improve overall quality, particularly motion and artifacts

- Diffusion forcing to extend video length

- Heavy compute: video models are heavy and expensive to run, even for short videos.

Engineering-level solutions such as optimised kernels and quantisation can go a long way towards addressing this, but they remain constrained by the total available accelerator memory. This is where algorithmic approaches help. Operating in a highly (spatio-temporally) compressed latent space reduces computational cost, but it also loses information; this loss can be addressed by tasking the decoder with the final denoising step as it decodes the latents into pixels.
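Why a more compressed latent space helps so much can be shown with a little arithmetic. The sketch below assumes a causal video autoencoder that encodes the first frame on its own and every later group of `t_factor` frames as one latent frame. The clip size, compression factors, and patch sizes are illustrative assumptions rather than the settings of any specific model, and the sketch covers only the cost side, not the decoder's final denoising step.

```python
def latent_grid(frames, height, width, t_factor, s_factor):
    """Latent (frames, height, width) for a causal video autoencoder.

    Assumes the first frame is encoded on its own and every later group of
    t_factor frames becomes one latent frame, so frames - 1 must divide evenly.
    """
    if (frames - 1) % t_factor or height % s_factor or width % s_factor:
        raise ValueError("input size is not divisible by the compression factors")
    return (1 + (frames - 1) // t_factor, height // s_factor, width // s_factor)


def num_tokens(grid, patch=(1, 1, 1)):
    """Number of transformer tokens after patchifying the latent grid."""
    (f, h, w), (pt, ph, pw) = grid, patch
    return (f // pt) * (h // ph) * (w // pw)


if __name__ == "__main__":
    # Illustrative settings: a 121-frame clip at 512x768 pixels.
    moderate = latent_grid(121, 512, 768, t_factor=4, s_factor=8)     # (31, 64, 96)
    aggressive = latent_grid(121, 512, 768, t_factor=8, s_factor=32)  # (16, 16, 24)
    n_mod = num_tokens(moderate, patch=(1, 2, 2))  # 47616 tokens
    n_agg = num_tokens(aggressive)                 # 6144 tokens
    # Full self-attention cost grows with the square of the token count.
    print(n_mod, n_agg, (n_mod / n_agg) ** 2)      # 47616 6144 60.0625
```

Under these assumptions the aggressive setting uses 7.75 times fewer tokens and roughly 60 times less attention compute, which is the saving that has to be weighed against the information lost in compression.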

I joined the BTech programme in Mathematics and Computing at the Indian Institute of Science in 2022. My faculty advisor, Professor Viraj Kumar, has guided my academic journey, and I had the opportunity to work under his mentorship during the summer of 2024.

I joined BTech at IISc in 2024.

I joined the BTech programme in 2024. My faculty advisor is Professor Viraj Kumar. I am interested in computer science and hope to pursue research in the field in the future.

Anmol Gill pursues the MTech (Artificial Intelligence) programme at IISc, in the Robert Bosch Centre for Cyber-Physical Systems (

I completed my BTech in Electronics and Communication Engineering at the Vellore Institute of Technology, Chennai, in August 2024. Since May 2024, I have been a research intern, supported by the Kotak-IISc AI/ML Centre, in the Human-Interactive Robotics (HiRo) Lab at the Robert Bosch Centre for Cyber-Physical Systems, IISc, working under the guidance of Professor Ravi Prakash. My main research interest is robot learning, within the broader area of AI and robotics.